A brief introduction to style transfer

Style transfer is an artistic technique and computational process that enables the fusion of two distinct images, where the content of one image is combined with the style of another, resulting in a new, unique image. This process has become popular in recent years, largely due to advancements in deep learning and artificial neural networks.

At the core of style transfer lies the concept of convolutional neural networks (CNNs), which are used to analyze and extract features from images. In the context of style transfer, there are typically two images involved: the content image, which provides the main subject or scene, and the style image, which contains the artistic style or texture to be applied to the content image.

Style transfer algorithms generally involve three main steps:

- Feature extraction: The CNN is used to extract content and style features from the input images. These features represent the high-level structure and details of the images, respectively.

- Style and content loss computation: Loss functions are defined to measure the difference between the extracted features of the generated image and those of the original content and style images. The objective is to minimize these loss values to ensure that the generated image closely resembles the desired content and style.

- Optimization: Using gradient-based optimization (e.g., gradient descent, with the gradients computed by backpropagation), the algorithm iteratively updates the generated image to minimize the loss functions. This process continues until a satisfactory level of content and style preservation is achieved in the generated image.

Style transfer has been widely applied in various domains, including art, design, photography, and more. It has also been used to create novel applications, such as transforming photos into the styles of famous painters, generating stylized animations, and even enhancing virtual reality experiences.

A brief history of style transfer

The concept of style transfer has its roots in both art and computer science. While artists have been combining and adapting styles for centuries, the computational aspect of style transfer emerged more recently with the advent of advanced machine learning techniques.

Here is a brief history of style transfer:

- Texture synthesis (1980s-2000s): Early attempts to manipulate images computationally focused on texture synthesis, where a sample texture was used to generate a new texture image. These methods laid the groundwork for later style transfer approaches.

- Non-photorealistic rendering (1990s-2000s): This field aimed to create artistic and stylized renderings of images or 3D models, often drawing inspiration from traditional artistic techniques like painting, drawing, or etching. While not explicitly focusing on style transfer, these techniques provided valuable insights into image manipulation and feature extraction.

- Patch-based methods (2000s): Early style transfer approaches used patch-based methods, which involved matching and transferring patches from the style image to the content image. These methods, however, often produced artifacts and could not consistently capture complex artistic styles.

- Neural Style Transfer (2015): The seminal paper by Gatys et al., titled “A Neural Algorithm of Artistic Style,” introduced a breakthrough approach that used deep learning and convolutional neural networks (CNNs) to perform style transfer. By using pre-trained CNNs and optimizing loss functions related to content and style, the authors demonstrated that their method could successfully combine the content of one image with the style of another.

- Fast style transfer (2016): While the original neural style transfer algorithm produced impressive results, it was computationally expensive and slow. To address this issue, researchers developed methods that used feed-forward networks, such as the paper by Johnson et al., “Perceptual Losses for Real-Time Style Transfer and Super-Resolution.” These methods significantly reduced the time required to generate stylized images.

- Further advancements (2016-present): Researchers have continued to refine and expand upon style transfer techniques. Some notable advancements include arbitrary style transfer (which allows a single model to apply styles it was not specifically trained on), multi-style transfer, video style transfer, and domain adaptation techniques that allow for applications beyond 2D images.

Throughout its history, style transfer has evolved from simple texture synthesis to complex deep learning-based approaches, resulting in a powerful tool for creative expression and image manipulation.

A Neural Algorithm of Artistic Style

“A Neural Algorithm of Artistic Style” was written by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge. Published in 2015, the paper is considered groundbreaking because it introduced neural style transfer, a technique that uses deep learning to transfer the artistic style of one image to another while preserving the content of the original image.

Here are some key details from the paper:

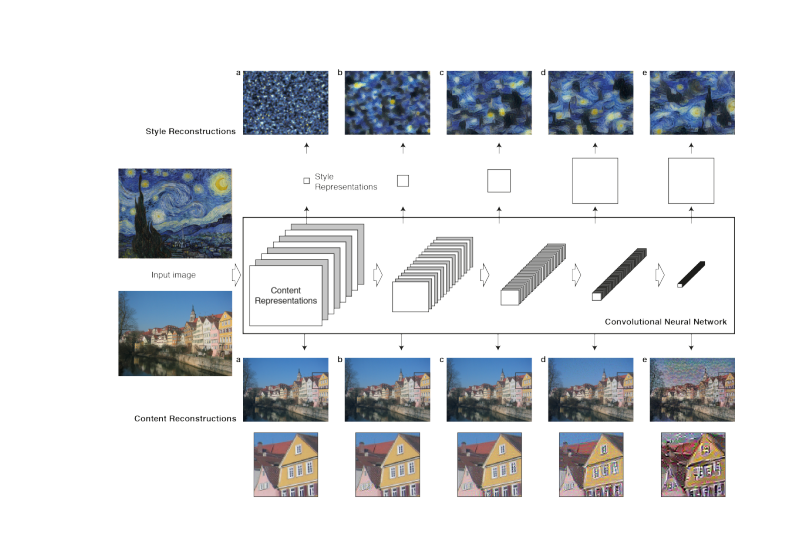

- Convolutional Neural Networks (CNNs): The authors use a pre-trained neural network called VGG-19, a type of CNN specifically designed for image recognition. They demonstrate that this network can be used to generate artistic images by separating and recombining content and style features from different input images.

- Content Representation: To represent the content of an image, the authors use the feature maps from the higher layers of the VGG-19 network. These feature maps capture the high-level information in the image, such as objects and their arrangement, while discarding the detailed pixel information.

- Style Representation: The style of an image is represented using Gram matrices, which capture the correlations between the different feature maps within a layer and are computed at several layers of the VGG-19 network. This representation is able to capture the textures, colors, and patterns in the style image, independent of the content.

- Loss Functions: The authors define two loss functions – content loss and style loss – to guide the optimization process. Content loss measures the difference between the content representations of the original image and the generated image, while style loss measures the difference between the style representations of the style image and the generated image.

- Optimization: The authors use gradient descent to minimize the weighted combination of content loss and style loss. This process iteratively adjusts the pixel values of the generated image until it achieves a balance between content preservation and style transfer.

- Results: The paper presents numerous examples of style transfer, demonstrating that the method is capable of generating visually appealing images that effectively combine the content of one image with the style of another. The authors also explore the effects of using different layers of the VGG-19 network for style representation, showing that the choice of layers can influence the level of abstraction and detail in the generated images.

In summary, the paper “A Neural Algorithm of Artistic Style” introduced the concept of neural style transfer, a technique that uses deep learning to create artistic images by transferring the style from one image to another. The authors demonstrated the effectiveness of this approach by using a pre-trained VGG-19 network and defining content and style loss functions to guide the optimization process, resulting in visually appealing images that combine the content and style of different input images.

A detailed introduction to Convolutional Neural Networks and VGG-19

Convolutional Neural Networks (CNNs) are a class of deep learning models specifically designed for image recognition and processing. They have been proven to be highly effective in various computer vision tasks, such as image classification, object detection, and segmentation. CNNs are inspired by the structure and function of the human visual cortex and are capable of learning hierarchical patterns and features from images.

A detailed introduction to Convolutional Neural Networks includes the following components:

- Convolutional Layers: The core building block of a CNN is the convolutional layer, which performs convolution operations on the input image or feature maps from previous layers. These operations involve sliding a set of learnable filters (or kernels) over the input, detecting patterns like edges, textures, and shapes. The result of the convolution operation is a set of feature maps that represent the presence of the detected patterns in the input image.

- Activation Functions: After the convolution operation, an activation function, usually the Rectified Linear Unit (ReLU), is applied element-wise to the feature maps. This introduces nonlinearity into the model, allowing it to learn complex, non-linear patterns in the input data.

- Pooling Layers: CNNs often include pooling layers, which reduce the spatial dimensions of the feature maps, making the model more computationally efficient and invariant to small spatial transformations. Common pooling operations include max-pooling and average-pooling, which retain the maximum or average value from a local region of the feature map, respectively.

- Fully Connected Layers: At the end of the CNN, one or more fully connected layers (also called dense layers) are used to combine the high-level features learned by the convolutional and pooling layers. These layers are responsible for making predictions or classifying the input image based on the learned features.

- Softmax and Loss Function: In classification tasks, a softmax activation function is used in the final layer to produce probability scores for each class. During training, a loss function (such as cross-entropy) is used to compare the predicted probabilities with the true labels and update the weights of the network accordingly.
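
The components listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not a practical CNN: it uses a single-channel image, one hand-written filter instead of learned ones, and hypothetical helper names (`conv2d`, `relu`, `max_pool`, `softmax`) chosen for this example. As in most deep learning libraries, the "convolution" here is implemented as cross-correlation.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide one kernel over a single-channel image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Element-wise Rectified Linear Unit."""
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max-pooling over size x size regions."""
    h, w = x.shape[0] // size, x.shape[1] // size
    x = x[:h * size, :w * size]
    return x.reshape(h, size, w, size).max(axis=(1, 3))

def softmax(z):
    """Turn a vector of scores into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

# Toy 6x6 image: the two leftmost columns are dark, the rest is bright.
image = np.zeros((6, 6))
image[:, 2:] = 1.0

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = relu(conv2d(image, kernel))  # shape (4, 4)
pooled = max_pool(feature_map)             # shape (2, 2)
probs = softmax(pooled.ravel())            # 4 scores treated as class scores
```

The feature map is non-zero only in the columns where the filter window straddles the edge, and pooling keeps that response while halving the spatial resolution. Feeding the pooled values straight into `softmax` is only for illustration; a real network would pass them through fully connected layers first.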

VGG-19 is a specific CNN architecture proposed by Karen Simonyan and Andrew Zisserman in their paper “Very Deep Convolutional Networks for Large-Scale Image Recognition.” VGG-19 consists of 19 weight layers, including 16 convolutional layers and 3 fully connected layers, followed by a softmax layer for classification. The model uses small 3×3 convolutional filters and employs a deep architecture with multiple stacked convolutional layers, which enables it to learn more complex and expressive features from the input images.
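
The layer count described above can be checked with a short sketch. The block layout below (five convolutional blocks with 2, 2, 4, 4, and 4 layers and 64 to 512 channels) follows the VGG-19 configuration; the names `VGG19_BLOCKS` and `feature_map_shape` are hypothetical, and the shape calculation assumes a 224×224 input, size-preserving 3×3 convolutions, and a 2×2 pooling step after each block.

```python
# (number of conv layers, output channels) for each of the five VGG-19 blocks
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]
NUM_FC_LAYERS = 3

# Layer names in the "conv<block>_<index>" convention, e.g. "conv4_2".
conv_layer_names = [
    f"conv{block}_{idx}"
    for block, (n_convs, _) in enumerate(VGG19_BLOCKS, start=1)
    for idx in range(1, n_convs + 1)
]

num_weight_layers = len(conv_layer_names) + NUM_FC_LAYERS  # 16 + 3

def feature_map_shape(layer_name, input_size=224):
    """Return (channels, height, width) of a conv layer's output.

    Assumes padded 3x3 convolutions (spatial size unchanged within a block)
    and one 2x2 pooling step between consecutive blocks.
    """
    block = int(layer_name[len("conv")])
    channels = VGG19_BLOCKS[block - 1][1]
    size = input_size // 2 ** (block - 1)
    return (channels, size, size)

print(num_weight_layers)
print(feature_map_shape("conv4_2"))
```

Under these assumptions, a layer such as `conv4_2` sits after three pooling steps, so its feature maps are much smaller spatially than the input but have many more channels.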

VGG-19 was trained on the ImageNet classification challenge data, which contains roughly 1.3 million labeled training images across 1,000 object categories. Due to its depth and strong performance on image recognition tasks, VGG-19 has become a popular choice for transfer learning and as a feature extractor in computer vision applications, including neural style transfer, as demonstrated in the paper “A Neural Algorithm of Artistic Style” by Gatys et al.

A detailed introduction to Content Representation

Content representation is a crucial aspect of neural style transfer, which is the process of transferring the artistic style from one image to another while preserving the content of the original image. In this context, content representation refers to the method used to capture and represent the high-level information in an image, such as the objects, their shapes, and their arrangement, while ignoring the specific details related to style, such as textures, colors, and patterns.

A detailed introduction to content representation in neural style transfer includes the following components:

- Convolutional Neural Networks (CNNs): In neural style transfer, pre-trained CNNs like VGG-19 are often used for content representation. These networks have been trained on large datasets like ImageNet and have learned to recognize and extract various features from images, making them suitable for extracting content information.

- Feature Maps: Feature maps are the output of convolutional layers in a CNN, which capture the presence of patterns and features detected by the network at different levels of abstraction. Higher layers in the network correspond to more abstract features and better capture the content of the image.

- Content Layers: To represent the content of an image, feature maps from higher layers in the CNN are used. By selecting one or more layers from the CNN, a content representation can be obtained that captures the high-level information in the image. In the case of the VGG-19 network used in the paper “A Neural Algorithm of Artistic Style,” the authors typically use the output of the ‘conv4_2’ layer for content representation.

- Content Loss: To ensure that the generated image preserves the content of the input image, a content loss function is defined. This function measures the difference between the content representation of the input image and that of the generated image. During the optimization process, the content loss is minimized, guiding the neural style transfer algorithm to produce an output image that retains the content of the input image.

In summary, content representation in neural style transfer is the method used to capture and represent the high-level information in an image while ignoring style-related details. By using pre-trained CNNs like VGG-19 and extracting feature maps from higher layers, a content representation can be obtained that effectively captures the objects and their arrangement in the image. The content loss function ensures that the generated image retains the content of the input image during the style transfer process.

A detailed introduction to Style Representation

Style representation is another critical aspect of neural style transfer, which focuses on capturing and representing the artistic style of an image, such as textures, colors, patterns, and brushstrokes, independent of its content. The goal is to transfer the style from one image to another while preserving the content of the original image.

A detailed introduction to style representation in neural style transfer includes the following components:

- Convolutional Neural Networks (CNNs): Similar to content representation, pre-trained CNNs like VGG-19 are used for style representation. These networks, trained on large datasets like ImageNet, can extract various features from images, including those related to style.

- Feature Maps: Feature maps are the output of convolutional layers in a CNN, representing the presence of patterns and features detected by the network at different levels of abstraction. Lower layers capture more detailed features like textures and patterns, while higher layers capture more abstract features related to style and content.

- Style Layers: To represent the style of an image, feature maps from multiple layers in the CNN are used. By selecting a combination of layers, a style representation can be obtained that captures both local and global style features. In the case of the VGG-19 network used in the paper “A Neural Algorithm of Artistic Style,” the authors typically use the output of layers ‘conv1_1’, ‘conv2_1’, ‘conv3_1’, ‘conv4_1’, and ‘conv5_1’ for style representation.

- Gram Matrices: The style representation of an image is obtained using Gram matrices, which capture the correlations between the feature maps of each selected style layer. Each feature map is flattened into a vector, and the Gram matrix entry for a pair of feature maps is the inner product of their vectors. Because this sums over all spatial positions, the Gram matrices encode information about the patterns, textures, and colors in the style image, independent of where they appear in the image.

- Style Loss: To ensure that the generated image incorporates the style of the style image, a style loss function is defined. This function measures the difference between the Gram matrices of the style image and the generated image for each selected style layer. During the optimization process, the style loss is minimized, guiding the neural style transfer algorithm to produce an output image that adopts the style of the style image.

In summary, style representation in neural style transfer is the method used to capture and represent the artistic style of an image, focusing on textures, colors, patterns, and brushstrokes. By using pre-trained CNNs like VGG-19, selecting multiple layers, and computing Gram matrices, a style representation can be obtained that effectively captures both local and global style features. The style loss function ensures that the generated image adopts the style of the style image.
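
A small NumPy sketch can make the Gram matrix concrete. It assumes the feature maps of one layer are available as an array of shape (channels, height, width); the random values stand in for real VGG-19 activations, and `gram_matrix` is a name chosen for this example.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of one layer's feature maps.

    features has shape (channels, height, width). Each feature map is
    flattened, and entry (i, j) is the inner product of maps i and j.
    """
    channels = features.shape[0]
    flat = features.reshape(channels, -1)
    return flat @ flat.T

rng = np.random.default_rng(0)
features = rng.standard_normal((3, 4, 4))  # stand-in for real activations
gram = gram_matrix(features)               # shape (3, 3)

# Shuffle the 16 spatial positions identically in every feature map.
perm = rng.permutation(16)
shuffled = features.reshape(3, 16)[:, perm].reshape(3, 4, 4)
same_style = np.allclose(gram_matrix(shuffled), gram)
```

Shuffling the spatial positions leaves the Gram matrix unchanged, which is one way to see why this representation describes texture-like statistics rather than the arrangement of objects.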

A detailed introduction to Loss Functions in this paper

In the paper “A Neural Algorithm of Artistic Style” by Gatys et al., loss functions play an essential role in guiding the neural style transfer algorithm to produce a visually appealing output image that effectively combines the content of one image with the style of another. There are two main loss functions used in this paper: content loss and style loss.

- Content Loss: The content loss function measures the difference between the content representation of the input image and that of the generated image. This loss function ensures that the generated image preserves the content of the input image during the style transfer process. Content loss is calculated as the squared error between the feature maps from a selected content layer in the CNN (typically ‘conv4_2’ in VGG-19) for both the input and generated images.

Content Loss = Σ (Fij - Pij)^2, where Fij and Pij are the activations of feature map i at position j in the selected content layer for the generated and input images, respectively. The paper additionally scales this sum by 1/2.

- Style Loss: The style loss function measures the difference between the style representation of the style image and that of the generated image for each selected style layer. This loss function ensures that the generated image adopts the style of the style image during the style transfer process. Style loss is calculated as the squared error between the Gram matrices for the style image and the generated image across multiple layers in the CNN (typically ‘conv1_1’, ‘conv2_1’, ‘conv3_1’, ‘conv4_1’, and ‘conv5_1’ in VGG-19).

Style Loss = Σ (Gij - Aij)^2, where Gij and Aij are the Gram matrix entries for feature maps i and j, for the generated and style images, respectively. This squared error is computed separately for each style layer; in the paper, each layer's term is normalized by the number and size of its feature maps, and the terms are then combined in a weighted sum over the layers.

Both content and style loss functions are combined in a weighted sum to form the total loss, which the algorithm aims to minimize during the optimization process:

Total Loss = α * Content Loss + β * Style Loss

Here, α and β are hyperparameters that determine the relative importance of content and style in the generated image. By adjusting these hyperparameters, the balance between content preservation and style transfer can be controlled.
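
The three formulas above can be sketched in NumPy as follows. The constants (the factor 1/2 in the content loss and the 1/(4·N²·M²) normalization of each style layer, with N feature maps of M positions each) follow the paper's formulation; the function names, the equal per-layer weights, the default values of `alpha` and `beta`, and the random stand-in features are assumptions made for this example.

```python
import numpy as np

def gram_matrix(features):
    """Inner products between the flattened feature maps of one layer."""
    flat = features.reshape(features.shape[0], -1)
    return flat @ flat.T

def content_loss(gen_feat, content_feat):
    """Half the summed squared difference between two feature arrays."""
    return 0.5 * np.sum((gen_feat - content_feat) ** 2)

def style_layer_loss(gen_feat, style_feat):
    """Normalized squared difference between Gram matrices for one layer."""
    n = gen_feat.shape[0]   # number of feature maps in the layer
    m = gen_feat[0].size    # spatial positions per feature map
    diff = gram_matrix(gen_feat) - gram_matrix(style_feat)
    return np.sum(diff ** 2) / (4.0 * n ** 2 * m ** 2)

def total_loss(gen_content_feat, content_feat, gen_style_feats, style_feats,
               alpha=1.0, beta=1000.0):
    """alpha * content loss + beta * style loss (equal weight per style layer)."""
    l_content = content_loss(gen_content_feat, content_feat)
    l_style = np.mean([style_layer_loss(g, s)
                       for g, s in zip(gen_style_feats, style_feats)])
    return alpha * l_content + beta * l_style

# Random arrays standing in for (channels, height, width) network activations.
rng = np.random.default_rng(0)
content_feat = rng.standard_normal((4, 5, 5))
style_feats = [rng.standard_normal((4, 5, 5)), rng.standard_normal((8, 3, 3))]
gen_content_feat = rng.standard_normal((4, 5, 5))
gen_style_feats = [rng.standard_normal(f.shape) for f in style_feats]

loss = total_loss(gen_content_feat, content_feat, gen_style_feats, style_feats)
```

In a real implementation the feature arrays would come from passing the generated, content, and style images through the same pre-trained network, and only the ratio between `alpha` and `beta` matters for the balance between content and style.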

In summary, the paper “A Neural Algorithm of Artistic Style” uses two main loss functions, content loss and style loss, to guide the neural style transfer algorithm. Content loss ensures that the generated image retains the content of the input image, while style loss ensures that the generated image adopts the style of the style image. By combining these loss functions in a weighted sum, the algorithm can produce visually appealing output images that effectively combine the content and style of different input images.

A detailed introduction to Optimization in this paper

In the paper “A Neural Algorithm of Artistic Style” by Gatys et al., optimization plays a critical role in generating an output image that combines the content of one image with the style of another. The optimization process aims to minimize the total loss, which is a weighted combination of content loss and style loss, through iterative adjustments to the pixel values of the generated image.

A detailed introduction to the optimization process in this paper includes the following components:

- Objective Function: The objective of the optimization process is to minimize the total loss, which is a weighted sum of content loss and style loss. The content loss ensures that the generated image preserves the content of the input image, while the style loss ensures that the generated image adopts the style of the style image.

Total Loss = α * Content Loss + β * Style Loss

Here, α and β are hyperparameters that determine the relative importance of content and style in the generated image.

- Initial Image: The optimization process starts with an initial image, which can be either a random noise image or a copy of the input image. The pixel values of this initial image will be iteratively adjusted during the optimization process to minimize the total loss.

- Gradient Descent: The authors use gradient descent, a first-order optimization algorithm, to minimize the total loss. Gradient descent computes the gradients of the loss function with respect to the pixel values of the generated image, indicating the direction in which the pixel values should be adjusted to reduce the loss. The pixel values are then updated using a learning rate, which determines the step size of the adjustments.

Updated pixel values = Current pixel values - (Learning rate * Gradients)

- Backpropagation: In order to compute the gradients of the loss function with respect to the pixel values, the authors use backpropagation, a widely used technique in training neural networks. Backpropagation calculates the gradients by applying the chain rule of calculus to the computational graph of the neural network, allowing the gradients to be efficiently computed for all layers in the network.

- Iterations: The optimization process involves multiple iterations of gradient descent and backpropagation, adjusting the pixel values of the generated image until the total loss converges to a minimum value or a maximum number of iterations is reached. The generated image at the end of the optimization process is the output of the neural style transfer algorithm, combining the content of the input image with the style of the style image.
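
The key idea in the steps above is that the pixels are optimized while the network stays fixed. The sketch below illustrates this with a deliberately simplified setup: a random linear map `W` stands in for the pre-trained network (an assumption for this example, not what the paper uses), only a content-style squared-error loss is minimized, and the gradient is written out by hand instead of being computed by backpropagation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the fixed, pre-trained network: a linear map from
# 16 pixel values to 8 feature values. Its weights are never updated.
W = rng.standard_normal((8, 16))

content_image = rng.random(16)        # flattened 4x4 "content image"
target_features = W @ content_image   # features the generated image should match

def loss_and_grad(pixels):
    """Squared-error feature loss and its gradient with respect to the pixels."""
    residual = W @ pixels - target_features
    loss = 0.5 * np.sum(residual ** 2)
    grad = W.T @ residual             # chain rule through the linear map
    return loss, grad

# Step size of 1 / (largest singular value of W)^2, small enough that
# gradient descent on this quadratic loss does not overshoot.
learning_rate = 1.0 / np.linalg.norm(W, 2) ** 2

pixels = rng.random(16)               # start from a random noise image
losses = []
for _ in range(200):
    loss, grad = loss_and_grad(pixels)
    losses.append(loss)
    # Updated pixel values = current pixel values - learning rate * gradients
    pixels = pixels - learning_rate * grad

generated_image = pixels.reshape(4, 4)
```

Because 16 pixels are mapped to only 8 features in this toy setup, many different images produce the same features, so the result matches the target features without necessarily reproducing the content image pixel by pixel. With a real network, a deep learning framework would compute the gradient with respect to the pixels automatically.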

In summary, the paper “A Neural Algorithm of Artistic Style” uses an optimization process based on gradient descent and backpropagation to minimize a weighted combination of content loss and style loss, iteratively adjusting the pixel values of the generated image. This optimization process results in an output image that effectively combines the content of the input image with the style of the style image.

A detailed introduction to Results in this paper

In the paper “A Neural Algorithm of Artistic Style” by Gatys et al., the authors present a series of results demonstrating the effectiveness of their neural style transfer algorithm in combining the content of one image with the style of another. These results showcase the ability of their approach to produce visually appealing and artistically-stylized output images while preserving the content of the input images.

A detailed introduction to the results in this paper includes the following aspects:

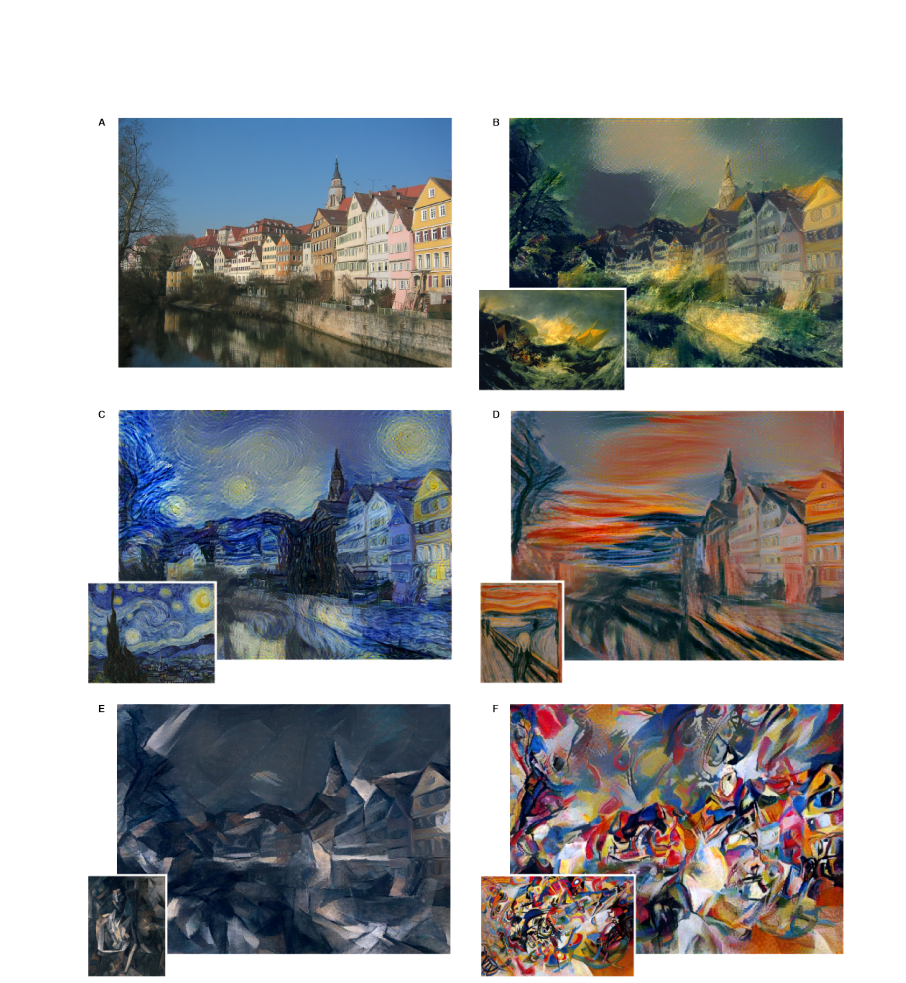

- Artistic Styles: The authors apply various artistic styles to different content images, highlighting the versatility of their neural style transfer algorithm. They use styles from famous paintings like “The Starry Night” by Vincent van Gogh, “The Scream” by Edvard Munch, and “Composition VII” by Wassily Kandinsky, among others.

- Content Preservation: The results show that the content of the input images is preserved effectively in the generated images. This is achieved by minimizing the content loss during the optimization process, which ensures that the high-level information in the input images, such as objects and their arrangement, is retained.

- Style Transfer: The authors demonstrate that their algorithm can effectively transfer the style from the style image to the generated image. This is achieved by minimizing the style loss during the optimization process, which ensures that the textures, colors, patterns, and brushstrokes in the style image are incorporated into the generated image.

- Parameter Settings: The authors present results with different parameter settings for the α and β hyperparameters, which determine the relative importance of content and style in the generated image. By adjusting these hyperparameters, the balance between content preservation and style transfer can be controlled, allowing for a wide range of output images with varying degrees of stylization.

- Relation to Other Methods: The authors position their algorithm relative to earlier approaches such as texture synthesis and non-parametric texture transfer. Whereas those methods mainly manipulate the pixel representation of an image directly, neural style transfer operates in the feature space of a deep network trained for object recognition.

- Extensions: Follow-up work has extended the algorithm, for example by applying multiple styles to a single content image or by transferring style between image regions based on semantic segmentation.

Conclusion

In summary, this article introduced style transfer and its history, and described in detail the paper that opened the field, “A Neural Algorithm of Artistic Style.” The paper introduces a groundbreaking neural style transfer algorithm that effectively combines the content of one image with the style of another, producing visually appealing and artistically stylized output images. The authors achieve this by using a pre-trained convolutional neural network, VGG-19, together with content and style loss functions that guide the optimization process.

The results presented in the paper showcase the algorithm’s versatility in transferring various artistic styles while preserving the content of the input images. The authors also explore different parameter settings and layer choices, demonstrating the wide range of possible applications for their approach in art, design, and image manipulation. The neural style transfer algorithm introduced in this paper has since become a foundational work in the field, inspiring further research and development of more advanced and efficient style transfer techniques.